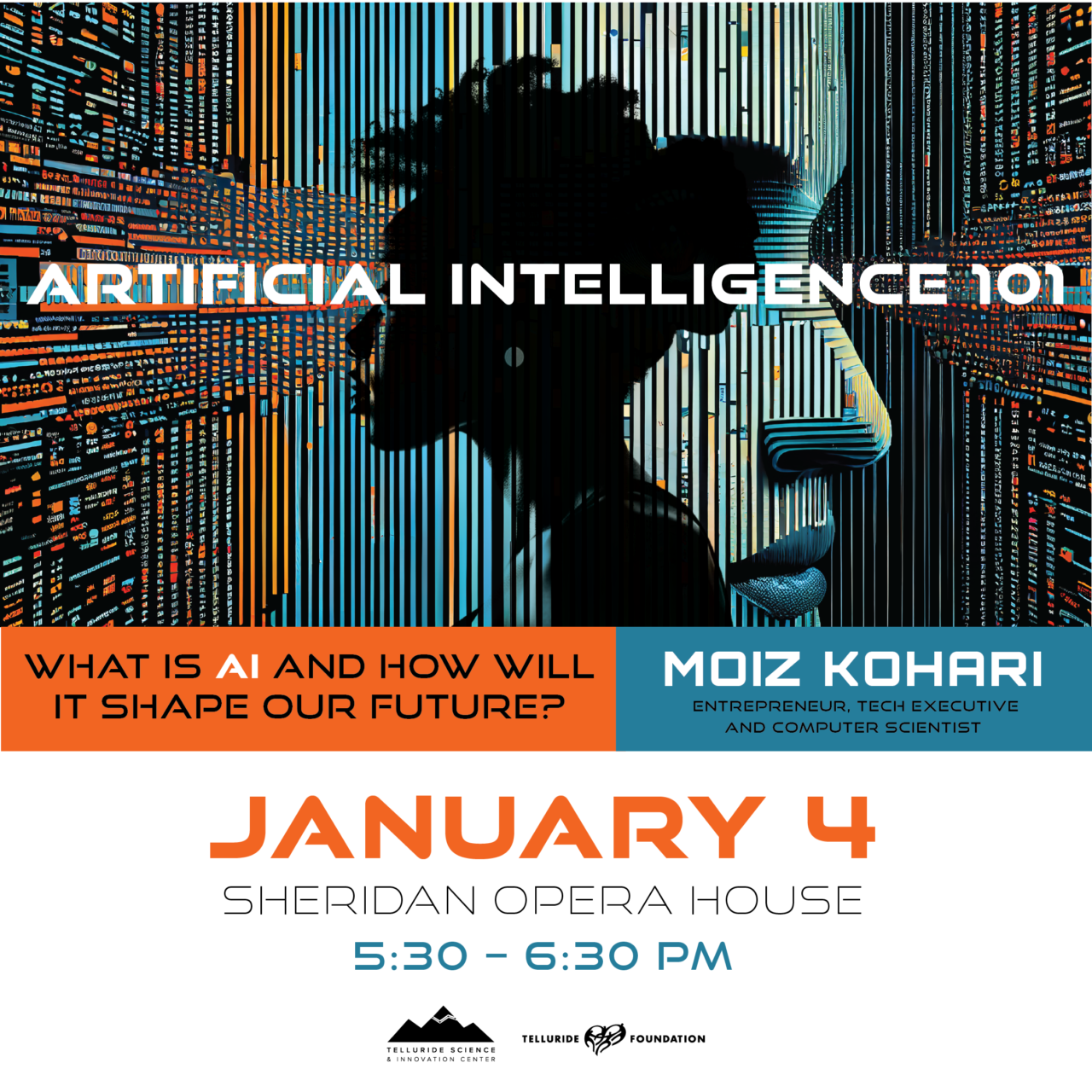

01 Jan Telluride Science & Foundation: “Artificial Intelligence 101” with Moiz Kohari, 1/4!

Telluride Science and the Telluride Foundation host local Moiz Kohari for a program titled “Artificial Intelligence 101.” The event is FREE and open to the public.

Visit telluridescience.org to learn more about the non-profit organization and the capital campaign to transform the historic Telluride Depot into the Telluride Science & Innovation Center. The venue will be the permanent home for Telluride Science and a global hub of inspired knowledge exchange and development where great minds get to solve great challenges.


November 30, 2022 is a date destined to go down in history.

According to online sources, the very first working AI programs were written in 1951 to run on the Ferranti Mark 1 machine at the University of Manchester: a checkers-playing program written by Christopher Strachey and a chess-playing program written by Dietrich Prinz.

Fast forward now to November 30, 2022, when OpenAI launched the chatbot ChatGPT, short for Chat Generative Pre-trained Transformer. The AI technology enables users to refine and direct their thoughts towards a particular length, format, style, level of detail, and language.

Netflix had to wait around 3.5 years to reach 1 million users. According to OpenAI, ChatGPT acquired 1 million users just five days after letting that cat – or chat – out of the box.

Since then, love it or hate it, everyone who is awake and aware of the Zeitgeist has been paying attention to AI. Almost overnight, a new crop of AI tools has found its way into products used by billions of people, changing the way we work, shop, create and communicate with one another.

For better.

Or for worse.

Advocates tout the technology’s potential to supercharge productivity, creating a new era of better jobs, better education and better treatments for diseases.

Skeptics have raised concerns about the technology’s potential to disrupt jobs, mislead and possibly bring about the end of humanity as we know it. They say advocates are sugarcoating a variation on the theme of the apocalypse.

And Silicon Valley execs? They and others in the tech space seem to hold both sets of views at once.

Confused?

Telluride local Moiz Kohari is an entrepreneur, tech executive and computer scientist with over 40 years of experience in those disciplines – the most recent 12 of which have been in AI, Machine Learning and related fields.

On January 4, 5:30 – 6:30 p.m., Telluride Science and the Telluride Foundation are hosting a talk titled “Artificial Intelligence 101,” scheduled to take place at Telluride’s Sheridan Opera House. Moiz is charged with parsing the subject for attendees. His goal is to break down the science and its ramifications in plain-speak.

To prep for the January 4th event and by way of background, check out Telluride Inside …and Out’s (TIO) email interview with Moiz.

TIO: What exactly is Artificial Intelligence?

MK: If a system – biological or digital – responds to a query then I suggest there is some level of intelligence present. We decided to call digital intelligence “artificial” even though we created this form of intelligence. Are our pets, which respond to certain commands, considered to possess a form of intelligence?

Artificial Intelligence refers to a computer system with the ability to mimic human cognitive functions such as learning and problem-solving. Back in 1955, John McCarthy coined the term, famously writing that “every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.”

At the most basic level computer systems have always been used to store data, then manipulate and query this data to deliver some level of intelligence. We have tried for decades to capture aspects of reality, which is the definition of data. The more data we have access to, the better we can train algorithms to deliver more precise answers to any given query.

Think about a self-driving car interpreting surrounding conditions to react appropriately, such as driving safely to a destination. Problems occur when unexpected things happen, such as an elk running in front of the vehicle. If, however, the car’s algorithms know how to deal with that specific scenario, the car can easily avoid the animal.

That grossly simple explanation leads us to believe that if all possible data is made available to a computer system it could refine its algorithms to tackle any event. Of course we know that is never the case and therefore not possible.

TIO: Who is credited with founding AI and why did the technology to make devices and software think intelligently like a human mind come about?

MK: For this answer we return to John McCarthy. His work at MIT with Marvin Minsky and others laid the foundation of what we recognize as AI. Alan Turing’s universal Turing machine is a mathematical model of the modern computer, and his 1950 paper “Computing Machinery and Intelligence,” which opens with the question “Can machines think?”, was one of the earliest thought-leadership papers on AI.

Truth is we all stand on the shoulders of giants. Not sure if a single person can or should be credited with the creation of an entire discipline.

TIO: How does an AI system or any deep learning model get built? My understanding is that data and results get loaded incrementally, in baby steps.

MK: First and foremost we need to understand that the goal of these seemingly esoteric systems is simply to create real intelligence. The only way we humans know how to do that is to model such systems in our own image, namely and more specifically on how the human brain works.

Many AI systems today leverage something known as a neural network. The system learns from an initial set of training data, then evolves to handle more complicated environments. This evolution mimics the way a human child develops over time to understand and respond to external stimuli, ultimately handling more and more new inputs.

AI systems learn from training data, are checked against test data and then are refined with real-time data, very much as a child develops by being exposed to one month of experiences, then six months, then 24 months, etc.

TIO: What are some practical applications of AI today?

MK: Most aspects of our daily lives leverage AI capabilities, which have been deployed for over a decade now. In short, they are not something new. Let me share some mundane examples. While texting, your phone may try to complete your sentence. Ads for products you may have thought you wanted to search for, but had not even queried, show up on your desktop. An airplane or car maneuvers to avoid a collision. Your credit card company knows that a certain transaction was fraudulent and advises you of same.

And so on…

TIO: Is there any doubt the underlying technology will continue to power meaningful advances in products and services for years to come? The fear is AI will eliminate millions of jobs. The hope is it will help improve how millions do their jobs. Is your truth a bit of both? If yes, which jobs, which industry (and therefore which workers) will be most affected?

MK: My personal spin on this subject is that about 80 percent of the jobs we know today, from the C-level on down the pyramid, will disappear. In the short-term view that takes about five years; in the longer-term view, not much more than 10 years.

Here’s one example. At the London Stock Exchange my team and I created a trade surveillance system based on Machine Learning or ML. The exercise took 10 people about nine months just to create a simple data flow pipeline and some additional algorithms to deploy. Today, a single person could create the same pipeline in a day, or a week at most.

AI technology and tools have only accelerated in their development over the past few years and I see this momentum continuing. While highly skilled workers will be needed to fine-tune and leverage these systems for some time to come, most mundane jobs will no longer require human intervention.

My hope is that this phenomenon will motivate us all to pursue more challenging activities that support human growth.

TIO: Why is everyone talking about AI now? Is the short answer ChatGPT?

MK: Yes, ChatGPT brought sophisticated query functionality to the masses. You can ask ChatGPT – or similar Large Language Models or LLMs – to summarize mounds of data in a matter of seconds. Customized LLMs help doctors and lawyers and engineers search through millions of documents to provide pertinent information in a matter of seconds.

We are truly making unimaginable amounts of information available in real-time, a powerful use of AI. Google has been a big influence on our lives for a while now, but LLMs will have an exponential effect on productivity, made available to us at almost no financial cost – at least none that is apparent.

TIO: Is ChatGPT an example of generative AI at work? If yes, how does generative AI operate? And how is generative AI different from AGI (or Artificial General Intelligence)? It is my understanding that OpenAI CEO Sam Altman has teased the possibility of a super-intelligent AGI that could go on to change the world or perhaps backfire and end humanity as we know it.

MK: Generative AI refers to a system learning from language, audio or vision-based input to predict the best answer to a query. AGI is much bigger and bolder than any task-specific generative AI model – in effect, a sentient being. Ethical use of AI will force conflict with commercial interests. We already witnessed that in the drama surrounding Sam Altman, who was initially fired by the Board of OpenAI before financial interests reinstated him and replaced the Board.

Appropriate guard rails (or regulations) are necessary to ensure that we don’t cause unintended harm to humanity. However, regulations without strict enforcement will not yield results. If there were no threat of enforcement how many people would abide by speed limits?

To protect humanity one of my favorite authors – Isaac Asimov – wrote the “Three Laws of Robotics”:

“A robot may not injure a human being or, through inaction, allow a human being to come to harm. A robot must obey orders given to it by human beings except where such orders would conflict with the First Law. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.”

I have not seen implementation of any such laws in our society. And the longer we wait, the more dangerous our world becomes.

TIO: So do you see AI as dangerous? Among the more immediate harms we see daily are spreading misinformation, perpetuating biases that exist in training data, enabling discrimination, spreading propaganda during elections and enabling a scary new era of gnarly scams. Bottom line: Is it fair to say the more AI tools are incorporated into core parts of society, the more potential there is for unintended consequences? It is well documented that regulators in the US and abroad are pushing for legislation to help put guardrails in place for AI, but it is unclear whether lawmakers can keep pace with the rapid advances in the field. Is trying to regulate AI like trying to hold mercury in your hands? Thoughts?

MK: I addressed bits and pieces of this subject in my prior answer. All powerful technologies (think nuclear bombs) require rules and restraints. Availability of such technologies without appropriate restraints is no doubt dangerous. Misinformation and biases will lead to a certain type of challenge, whereas direct use of AI on the battlefield for example could lead to different types of consequences such as machines learning that human life is not sacred.

I am not trying to paint a picture of a future in which we will necessarily face extinction, but it does behoove us to think through how best to protect our race. There is no time like the present to implement appropriate thought leadership to prevent a Darwinian dystopia.

TIO: Now let’s get personal. Please describe your path to a career in tech and, of late, in AI. What was – or is – the company you are working with now, MinIO, all about?

MK: MinIO enables extremely large sets of data (unlimited amounts) to be persisted and presented for use in the cloud. Data is the lifeblood of AI. The quality, quantity and relevance of data used to train, validate and test AI models is fundamental to the operations of any intelligent system. I am currently helping some of the largest organizations – think S&P 100 – deliver predictive models and LLMs to handle security and decision-making systems.

Eric Schmidt (former CEO of Google and my former employer Novell) famously said: “Every two days, we create as much data as we did from the dawn of civilization until 2003.”

The exact growth trajectory for data may be different, but the statement is correct in highlighting the unprecedented and rapid growth of data.

I feel lucky to be playing a small role in this budding, life-changing world.
